My hobby notebook, infrequently used. This is primarily to clean off my desktop, but if someone else can make use of any of it, all the better...

Tuesday, April 22, 2014

iTunes 11 - Hiding Cloud Content

Sometimes you just don't want to see all of your online media; in those cases, open the iTunes 11 application and perform the following steps:

- Edit | Preferences
- Store tab
- Uncheck "Show iTunes in the Cloud purchases"

Monday, February 3, 2014

Oracle CLOBs with JDBC getString

In newer releases of Oracle, the JDBC getString() method (on ResultSet), along with its PreparedStatement.setString() counterpart, is "overloaded" to let you work with a CLOB column directly, without using the special Clob object. It's generally good up to around 32KB; to go larger, set the SetBigStringTryClob connection property, as in the Java code below.

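```java
import java.sql.Connection;
import java.sql.DriverManager;
import java.util.Properties;
import oracle.jdbc.OracleDriver;

String url = "jdbc:oracle:thin:@localhost:1521:orcl";
String user = "scott";
String password = "tiger";

Properties props = new Properties();
props.put("user", user);
props.put("password", password);
// Allow setString() to bind strings beyond the ~32KB limit to a CLOB column
props.put("SetBigStringTryClob", "true");

// Explicit registration; JDBC 4.0+ drivers also register themselves automatically
DriverManager.registerDriver(new OracleDriver());
Connection conn = DriverManager.getConnection(url, props);
```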

Generally speaking, this workaround should not be used too frequently. In most instances, you'll want to stream the object properly; however, if the size of your strings is dancing on the edge of VARCHAR2 limits, you can get away with this workaround to simplify things.
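When the string really is large, "streaming the object properly" means reading the CLOB through its character stream instead of one getString() call. Below is a minimal sketch, assuming the Clob comes from something like resultSet.getClob("MY_CLOB_COLUMN") (a hypothetical column name). To keep it runnable without a database, javax.sql.rowset.serial.SerialClob stands in for the Oracle CLOB; the class name ClobReader and the 8K buffer size are arbitrary choices, not anything from the Oracle driver.

```java
import java.io.IOException;
import java.io.Reader;
import java.sql.Clob;
import java.sql.SQLException;
import java.util.Arrays;
import javax.sql.rowset.serial.SerialClob;

public class ClobReader {

    // Reads an entire CLOB through its character stream in fixed-size chunks,
    // so no single call has to carry the whole value at once.
    public static String readClob(Clob clob) throws SQLException, IOException {
        StringBuilder sb = new StringBuilder();
        char[] buffer = new char[8192];
        try (Reader reader = clob.getCharacterStream()) {
            int n;
            while ((n = reader.read(buffer)) != -1) {
                sb.append(buffer, 0, n);
            }
        }
        return sb.toString();
    }

    public static void main(String[] args) throws Exception {
        // SerialClob is an in-memory stand-in for a Clob returned by
        // ResultSet.getClob() (hypothetical column, no database needed here).
        char[] data = new char[100_000];
        Arrays.fill(data, 'x');
        String text = readClob(new SerialClob(data));
        System.out.println(text.length());
    }
}
```

With a real connection, the same readClob() method would be handed the result of getClob() on the CLOB column.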

Friday, January 31, 2014

Using AWS Role Credentials, Part III

The last remaining step in using AWS role credentials was to launch an EC2 instance using the role created in Part II, and deploy the test application built in Part I. Launching the instance via the console is done in the usual manner, with one very important addition: on step 3, be sure to apply the IAM role to your instance:

After the instance was launched, the test application was zipped and deployed to EC2 via:

```shell
psftp ec2-user@ec2-w-y-x-z.compute-1.amazonaws.com -i mykeys.ppk
```

Before running the application, the instance metadata was queried on the EC2 instance to validate that the role was applied and that temporary credentials were being generated:

```shell
curl -L 169.254.169.254/latest/meta-data/iam/security-credentials/EC2-READ-S3
```

which resulted in (partial snapshot):

Finally, the test application was unzipped and run successfully:

As time allows, I need to research the mechanism that generates the temporary credentials, and see how it affects code where objects are cached for an extended period of time: will the client always refresh the credentials before expiration via the SDK, or must additional steps be taken to refresh them periodically by hand?
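For thinking about that question, it helps that the metadata response queried above carries an "Expiration" timestamp alongside the temporary keys. The sketch below shows the kind of check a long-lived cached client needs: build the metadata URL for a role, read Expiration, and decide whether to fetch fresh credentials. It is an illustration under stated assumptions, not a description of what the AWS SDK does internally: the class and method names, the regex-based parsing (a real client would use a JSON parser), the sample JSON values and the 5-minute refresh window are all hypothetical.

```java
import java.time.Duration;
import java.time.Instant;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

public class RoleCredentialsCheck {

    // Base path of the instance metadata endpoint queried with curl above.
    static final String METADATA_BASE =
            "http://169.254.169.254/latest/meta-data/iam/security-credentials/";

    private static final Pattern EXPIRATION =
            Pattern.compile("\"Expiration\"\\s*:\\s*\"([^\"]+)\"");

    // URL holding the temporary credentials for a role, e.g. "EC2-READ-S3".
    public static String credentialsUrl(String roleName) {
        return METADATA_BASE + roleName;
    }

    // Extracts the ISO-8601 "Expiration" value from the metadata JSON.
    public static Instant parseExpiration(String json) {
        Matcher m = EXPIRATION.matcher(json);
        if (!m.find()) {
            throw new IllegalArgumentException("No Expiration field found");
        }
        return Instant.parse(m.group(1));
    }

    // True when the credentials expire within the given window, meaning a
    // cached client should fetch new credentials before using them again.
    public static boolean needsRefresh(Instant expiration, Instant now, Duration window) {
        return !now.plus(window).isBefore(expiration);
    }

    public static void main(String[] args) {
        // Hypothetical response fragment with placeholder values, not real output.
        String json = "{ \"Code\" : \"Success\", \"AccessKeyId\" : \"EXAMPLEKEY\", "
                + "\"Expiration\" : \"2014-01-31T18:00:00Z\" }";
        Instant expiration = parseExpiration(json);
        Instant now = Instant.parse("2014-01-31T17:57:00Z");
        System.out.println(credentialsUrl("EC2-READ-S3"));
        System.out.println(needsRefresh(expiration, now, Duration.ofMinutes(5)));
    }
}
```

Refreshing slightly ahead of the deadline, rather than exactly at it, avoids a request being signed with credentials that expire mid-flight.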

Thursday, January 30, 2014

Using AWS Role Credentials, Part II

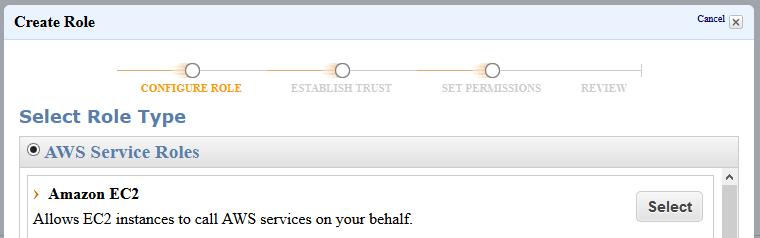

This entry documents the creation of the IAM role to support dynamic credentials in EC2. From the AWS Console, open IAM and select Roles, then create a role as follows:

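The highlighted portion can be redone to limit access to an individual bucket. Buckets are referenced by an ARN. You would think that "arn:aws:s3:::SPARETIMENOTEBOOK/*" would be sufficient, but as it turns out, it is not: that ARN covers only the objects in the bucket, while bucket-level actions such as s3:ListBucket (which the listing in Part I relies on) are granted on the bucket ARN itself, "arn:aws:s3:::SPARETIMENOTEBOOK". This is documented elsewhere, but the best description I found was here. The modified "Resource" section of the policy is shown below.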
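As a sketch of what a bucket-scoped policy ends up looking like, the Java below assembles a policy document that lists both the bucket ARN and the bucket/* ARN in its Resource section. The class and method names are hypothetical, and the two actions (s3:ListBucket and s3:GetObject) are an assumption chosen for a read-only listing client; the policy the role actually received may grant a different set.

```java
import java.util.Arrays;
import java.util.List;

public class BucketPolicySketch {

    // Bucket-level actions (e.g. s3:ListBucket) match the bucket ARN, while
    // object-level actions (e.g. s3:GetObject) match "bucket/*", so both are listed.
    public static List<String> resourceArns(String bucket) {
        return Arrays.asList("arn:aws:s3:::" + bucket, "arn:aws:s3:::" + bucket + "/*");
    }

    // Builds a read-only policy document scoped to a single bucket
    // (actions chosen for illustration only).
    public static String readOnlyPolicy(String bucket) {
        List<String> arns = resourceArns(bucket);
        return "{\n"
                + "  \"Version\": \"2012-10-17\",\n"
                + "  \"Statement\": [{\n"
                + "    \"Effect\": \"Allow\",\n"
                + "    \"Action\": [\"s3:ListBucket\", \"s3:GetObject\"],\n"
                + "    \"Resource\": [\"" + arns.get(0) + "\", \"" + arns.get(1) + "\"]\n"
                + "  }]\n"
                + "}";
    }

    public static void main(String[] args) {
        System.out.println(readOnlyPolicy("SPARETIMENOTEBOOK"));
    }
}
```

The printed JSON can be pasted into the role's policy editor; dropping the first ARN is enough to reproduce the "bucket/* is not sufficient" failure when listing.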

After creating the role, it will appear in the list and be ready for use.

In the next entry, I will launch an EC2 instance using this role and run the test application.

Sunday, January 26, 2014

Using AWS Role Credentials, Part I

Due to limited tinkering time, this process will be documented in a series of smaller blog entries...

Amazon AWS provides a mechanism within EC2 to automatically generate API credentials tied to IAM roles. Basically, this is a long-winded way of saying that they've implemented something to replace embedded passwords (the access key and secret key, or AK/SK).

The first step in this process is to write a simple Java-based client that connects to S3 and lists the contents of a given bucket. This application will initially use an AwsCredentials.properties file to test locally. In Parts II and III, we will create an IAM role with S3 access, remove the credentials file, and deploy the application to a running EC2 instance that uses the role, to verify success.

The initial application file is listed below:

```java
package chris;

import com.amazonaws.auth.ClasspathPropertiesFileCredentialsProvider;
import com.amazonaws.regions.Region;
import com.amazonaws.regions.Regions;
import com.amazonaws.services.s3.AmazonS3;
import com.amazonaws.services.s3.AmazonS3Client;
import com.amazonaws.services.s3.model.ListObjectsRequest;
import com.amazonaws.services.s3.model.ObjectListing;
import com.amazonaws.services.s3.model.S3ObjectSummary;

public class Application
{
    // Print every key in the bucket. S3 returns at most 1000 keys per call,
    // so keep requesting batches while the listing is truncated.
    public void listObjects(AmazonS3 s3Client, String bucketName)
    {
        ListObjectsRequest listObjectsRequest = new ListObjectsRequest().withBucketName(bucketName);
        ObjectListing objectListing = s3Client.listObjects(listObjectsRequest);
        while (true)
        {
            for (S3ObjectSummary objectSummary : objectListing.getObjectSummaries())
            {
                System.out.println(objectSummary.getKey() + " (size: " + objectSummary.getSize() + ")");
            }
            if (!objectListing.isTruncated())
            {
                break;
            }
            objectListing = s3Client.listNextBatchOfObjects(objectListing);
        }
    }

    public void run()
    {
        Region region = Region.getRegion(Regions.US_EAST_1);
        String bucketName = "SPARETIMENOTEBOOK";
        try
        {
            // USE THE FOLLOWING LOCALLY WITH AWSCREDENTIALS.PROPERTIES FILE PRESENT
            AmazonS3Client s3Client = new AmazonS3Client(new ClasspathPropertiesFileCredentialsProvider());

            // USE THE FOLLOWING ON PRODUCTION EC2 INSTANCE
            // (the no-argument constructor falls back to the default credentials
            // provider chain, which includes the instance role credentials)
            // AmazonS3Client s3Client = new AmazonS3Client();

            s3Client.setRegion(region);
            listObjects(s3Client, bucketName);
        }
        catch (Exception e)
        {
            e.printStackTrace();
        }
    }

    public static void main(String[] args)
    {
        Application app = new Application();
        app.run();
    }
}
```

AwsCredentials.properties contains only the following:

```properties
secretKey=MY_SECRET_KEY_GOES_HERE
accessKey=MY_ACCESS_KEY_GOES_HERE
```

with the obvious inclusion of the actual key values...
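For the local test, ClasspathPropertiesFileCredentialsProvider picks this file up from the classpath. The plain-Java sketch below only illustrates that idea (find AwsCredentials.properties at the classpath root and read accessKey and secretKey); it is not the SDK's implementation, and CredentialsFileSketch and Keys are hypothetical names.

```java
import java.io.IOException;
import java.io.InputStream;
import java.util.Properties;

public class CredentialsFileSketch {

    // Simple holder for the two values read from the file (hypothetical type).
    public static final class Keys {
        public final String accessKey;
        public final String secretKey;

        Keys(String accessKey, String secretKey) {
            this.accessKey = accessKey;
            this.secretKey = secretKey;
        }
    }

    // Extracts accessKey/secretKey, failing loudly if either is missing.
    public static Keys fromProperties(Properties props) {
        String access = props.getProperty("accessKey");
        String secret = props.getProperty("secretKey");
        if (access == null || secret == null) {
            throw new IllegalArgumentException("accessKey and secretKey are both required");
        }
        return new Keys(access.trim(), secret.trim());
    }

    // Loads AwsCredentials.properties from the root of the classpath.
    public static Keys loadFromClasspath() throws IOException {
        try (InputStream in = CredentialsFileSketch.class.getResourceAsStream("/AwsCredentials.properties")) {
            if (in == null) {
                throw new IOException("AwsCredentials.properties not found on the classpath");
            }
            Properties props = new Properties();
            props.load(in);
            return fromProperties(props);
        }
    }

    public static void main(String[] args) throws IOException {
        Keys keys = loadFromClasspath();
        // Print only the access key; never log the secret key.
        System.out.println("Loaded access key " + keys.accessKey);
    }
}
```

This also shows why the file has to be dropped for the EC2 deployment: once it is gone, a client built on it has nothing to read, which is where the role-based credentials take over.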

After compilation, the class ran as expected and displayed the contents of the given S3 bucket. In the next part, I will create an IAM role for EC2; after that, I will deploy the application to a running instance to verify that the code runs properly without the included credentials.

Thursday, January 9, 2014

Breadboard Mk. II

Although the Mark I had served me well, it turned out to be a bit large for most of my needs. With a few more projects gearing up in 2014, I decided to exchange size for quantity, and built 3 smaller boards instead of the one larger unit.

The new foundation is a 6x9 polyethylene cutting board with two 840-point breadboards and a 60-point bus strip running across the top. Enough room remains on top to add binding posts at a later date, but over time I've found that I don't use them often, so I'll hold off on them for now. The Arduino Rev3 was also mounted on a 6x9 board. This works well but could be improved...

Overall, I probably could have used a prefab board, but I find the heavier 1/2" cutting board material easier to hold and less prone to scratching my desktop, so it was worth an evening of easy assembly.
